A Creativity Index for Scientists.

Oleg Matveev

Abstract

The reward and ranking system that dominates modern science is highly subjective and inaccurate. Auxiliary to it are two semi-objective methodologies, which attribute a numerical value to the individual rank of scientists based on the number of publications and/or the citation index and its derivatives. These two approaches have many known drawbacks and are therefore often considered impractical and useless. In this essay a third semi-objective, potentially more precise system is suggested. In essence, it assigns to the rank a numerical value derived from all known information about novel, nonobvious, and proven useful ideas that were personally brought forward by a scientist. Scientists will submit their personal summaries and assessments of their ideas and of the uses of those ideas to an open Internet portal-agency. From this information scientists, themselves or with the help of an agency, will calculate the applicant’s rank and/or creativity index. Colleagues will not be asked to verify the application; they will only be asked to provide refuting evidence if it contains discrepancies or incorrect statements.

Science is one of the most important social institutions of modern society. The further prosperity of the most developed countries in the world largely depends on the efficiency of their scientific enterprise and on how well scientific research is organized, managed and funded [1]. There are many signs that in the 21st century the pace of scientific progress has begun to slow and that the productivity of scientists is declining. More and more scientific journals are flooded with science of marginal value and even with false research findings [2] (see also http://www.ma.utexas.edu/users/mks/statmistakes/StatisticsMistakes.html). In recent years countless ideas and concepts about how to improve the quality and efficiency of the modern scientific enterprise have been put forth by scientists at all levels of the scientific hierarchy (see, for example, [1,3-9]).

An important constituent of the current model of research organization and management is the reward and ranking system (RRS). The future of science depends heavily on the honesty and fairness of intellectual competition, and on the ability of the RRS to “identify and promote individuals who have the creativity and intellectual independence” [10]. In modern science the RRS is to a great degree subjective: at the top, scientists evaluate each other’s performance and rewards, while the abilities of researchers at the bottom are evaluated by established scholars. As in any subjective system, the rank and reward of scientists often depend on numerous extraneous and irrelevant factors [5, 7, 11-14]. Perhaps the most significant reason why modern science should move from an almost entirely subjective RRS to a more objective system is the emergence of an insidious tendency: as less money is available for research and funds become harder to acquire, scientists are gradually led to adopt less moral, decent, and honorable reasoning in their everyday lives. This phenomenon was especially evident while the scientific enterprise in the USSR was being demolished during the capitalist revolution of 1985-2000, which by 1992-93 had decreased real salaries and science funds by almost a factor of ten.

One way to objectively evaluate the rank of scientists would be a free market system, where novel, nonobvious and truly innovative ideas of scientists are recognized as intellectual property unequivocally protected by the state [15] and, as such, could be sold. However, instead of giving scientists the possibility of having their scientific ideas treated as intellectual property, society provides them with a surrogate: better pay and more prestigious positions, promotions and appointments for better scientists, often accompanied by a variety of prizes and awards.

It is a common tradition in science that more creative and efficient scientists should be better rewarded. As noted before, the process of appointments, promotions, prizes, awards and attribution of scientific credit is highly subjective and, as a rule, nontransparent. Therefore, it is prone to cronyism, corruption, and misappropriation of credit. A classic example of such misappropriation and unethical behavior is the Newton-Leibniz quarrel (1709-1716) [16]. Even Newton, the best physicist of all time, who then held the highest position in the hierarchy of scientists, the Presidency of the Royal Society of London, exhibited unethical behavior while disputing priority matters with the coinventor of calculus, Gottfried Leibniz. Many cases of unacceptably late recognition, unfair competition and misappropriation of credit are also known in modern times. In 20th century physics, perhaps the most famous and publicized cases are associated with the names of Leo Szilard and Gordon Gould. The coinventor of the nuclear reactor and atomic bomb, the “Genius in the Shadows” [17] Leo Szilard, was not inducted into the National Inventors Hall of Fame until 32 years after his death. Likewise, laser coinventor Gordon Gould was inducted there only 32 years after the invention.

Corruption in science causes serious aberrations in intellectual competition and in the reward system. In contrast to the business world, the corrupt behavior of scientists has practically never been punished; moreover, it is often beneficial for them. It is well known that three types of corruption are widespread in science: the exchange of favors (quid pro quo), which is easy to hide, very difficult to detect and, as a rule, known only to the participants; misattribution of credit, when the achievement of a low-ranking scientist is ascribed to one of higher rank (the Matthew effect [18]); and, standing rather apart but to some degree a particular case of the Matthew effect, Stigler’s law of eponymy (see http://en.wikipedia.org/wiki/Stigler's_law_of_eponymy), which postulates (with some hyperbole) that “No scientific discovery is named after its original discoverer”, i.e. that science lacks an acceptable level of honesty and fairness in intellectual competition.

To eliminate subjectivity and to improve the existing RRS, scientists have instituted practically only two methodologies. One of them is the ranking of scientists based on the number of their publications. A pernicious derivative of this methodology is the notorious “publish or perish” paradigm. Despite the overwhelming criticism of this stereotype and the fact that more than 90% of scientific findings have never been used, there is still a strong correlation between the number of published papers and a scientist’s salary. In bioscience, for example, “each [additional] publication correspond to approximately 0.9% higher salary” [19].

Another method is based on the assessment of a scientist’s contribution using Internet statistics (see, for example, www.altmetric.com and www.altmetrics.org) and/or the data provided by the well-known citation index or its derivatives (such as the h-index). These data are available online through the Web of Science database.

However, the citation index has so many well-known drawbacks and deficiencies that its usefulness for practical purposes should be considered as very doubtful.

Some of the known drawbacks are the following:

1. In the frequent case of many coauthors, there is no information about the personal contribution of each one. Many scientific journals still do not have a mandatory requirement to provide a list of contributions. Often credit for everything goes to the highest-ranking and most established scientist [11] among the coauthors, even if he/she was only a figurehead, “a head or chief in name only”.

2. More than 90% of published scientific information has never been used in any way, and much of it is erroneous [2]. Both cited and citing papers could belong to this category.

3. It frequently happens that after a period of time (normally 5-10 years), the number of citations to the original, pioneering publication declines despite the fact that the number of papers in the new field derived from the original paper may be growing.

4. Citation practice can be politically biased. Scientists from one country tend not to cite scientists from another if there is political animosity between the two countries.

5. Scientific books and reviews are cited more often than publications of new research. However, very often the book and review authors have done only limited research in the area.

6. The work of a scientist may simply be missed, forgotten, or ignored under the pretense of having been missed [20]. This is especially true when the work is described in personal blogs or web sites of scientists, in low-impact journals, in proceedings (abstracts) or in presentations at scientific conferences. According to the survey by B. Grant [21], “the vast majority of the survey's roughly 550 respondents - 85% - said that citation amnesia in the life sciences literature is an already serious or potentially serious problem. A full 72% of respondents said their own work had been regularly or frequently ignored in the citations list of subsequent publications.”

7. As mentioned by W.G. Schulz [20], “… there is a new trend: … to rank scientists by the number of citations their papers receive. Consequently, I predict that citation-fishing and citation-bartering will become major pursuits.” Here the plainly corrupt mechanism of exchanging favors is predicted to become a major problem. The exact prevalence and trend of this practice in modern science are not known.

8. M.V. Simkin and V.P. Roychowdhury [22] found that “simple mathematical probability, not genius, can explain why some papers are cited a lot more than other”. They concluded that “the majority of scientific citations are copied from the list of references used in other papers.” Because the offending authors often do not read or analyze the papers they cite, they did not actually use the cited work, as they would have in the case of honest citation.

9. As mentioned above, reviews and books are the most cited scientific publications; however, the results of G. Mowatt et al. [23] showed that in some fields of medical science 39% of reviews “had evidence of honorary authors, 9% had evidence of ghost authors, and 2% had evidence of both honorary and ghost authors.” In other words, 50% of the reviews (39% + 9% + 2%) showed authorship irregularities, so that listed authors of reviews, who could potentially gain the majority of citations, may not even have participated in writing them. The prevalence of this in other fields of science is not known.

10. A rather high citation index does not always guarantee job security or protection against the administrative tyranny of managers in science. It is often more important to get along with scientific bureaucrats [24].

11. Nobel Prizes boost citations: “citations are sometimes selected not because they are necessarily the best or most appropriate but to capitalize on the prestige and presumed authority of the person. This further distorts a picture that already contains a rich-get-richer element.” See www.nature.com/news/2011/110506/full/news.2011.270.html

12. It is also worth mentioning such a phenomenon as coercive citation. Scientists sometimes are coerced to cite papers from journals to which they submit their own research work [25].

More recently the existing RRS has been criticized by many authors [7, 10-12]. Dr. J. Lane, in her publication in Nature [12], asserts that current systems of academic performance measurement are inadequate: “Widely used metrics … are of limited use … science should learn lessons from the experiences of other fields, such as business.” It is apparent that a future RRS should be based on metrics containing information about the personal scientific contributions of every scientist. However, as Lane emphasizes [12], there is a serious obstacle, because “scientists do not like to see themselves as specimens under a microscope”; i.e. scientists do not mind seeing themselves as coauthors of papers, but they often do not like their contribution to a paper to be revealed in detail. To a great extent the modern RRS is based not on the assessment of the individual contributions of scientists but on authorship of scientific publications with many coauthors. Naturally, such a practice of multiple coauthorship dilutes the individual creative contribution. As P.J. Wyatt noted [26], “authorship … expanded despite only the peripheral or negligible contribution by some, historically referenced in an acknowledgement section... What was actually the creativity of one or two authors become the work of a great many.”

One of the most visible recent examples of complete failure (some commentators called it “institutional failure”) of the modern RRS is related to the Nobel Prize for the discovery of the green fluorescent protein and to a jobless scientist who was the first to clone its gene. “The 2008 prize was awarded to Osamu Shimomura, Martin Chalfie and Roger Y. Tsien for their work on green fluorescent protein or GFP. However, Douglas Prasher was the first to clone the GFP gene and suggested its use as a biological tracer. Martin Chalfie stated, "Douglas Prasher's work was critical and essential for the work we did in our lab. They could've easily given the prize to Douglas and the other two and left me out." Dr. Prasher's accomplishments were not recognized and he lost his job. When the Nobel was awarded in 2008, Dr. Prasher was working as a courtesy shuttle bus driver in Huntsville Alabama.” See http://en.wikipedia.org/wiki/Douglas_Prasher

In the well-known fields of science (physics, chemistry, etc.) Nobel Prizes have been awarded to approximately 1 out of 10,000 scientists. Let us assume that the rank of a Nobelist is at least 4 (log10(10,000) = 4). Let us also assume that the unemployment rate among scientists is 1%, i.e. that an unemployed scientist is theoretically the worst one out of 100, so that his/her rank is, at best, -2 (-log10(100) = -2). According to this reasoning, the difference between the ranks of an unemployed scientist and a Nobelist is approximately 6 or more, i.e. six orders of magnitude. Therefore, if a scientist who has de facto Nobelist rank (e.g., Prasher) is jobless, the error of the reward system of science in determining his rank is around 6 orders of magnitude. As will be seen further, the system suggested in this essay may give people like D. Prasher a rank even higher than that of Nobel Prize winners.
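The arithmetic behind this estimate can be sketched in a few lines of Python. This is only an illustration under the assumptions stated in the paragraph above (1 Nobelist per 10,000 scientists, 1% unemployment); the helper name log_rank and its top flag are hypothetical and are not part of the essay's proposal.

```python
import math


def log_rank(one_in_n: float, top: bool = True) -> float:
    """Rank on a log10 scale (hypothetical helper, not defined in the essay).

    The best one out of N gets +log10(N); the worst one out of N gets -log10(N).
    """
    if one_in_n < 1:
        raise ValueError("one_in_n must be at least 1")
    magnitude = math.log10(one_in_n)
    return magnitude if top else -magnitude


# Assumptions taken from the text: 1 Nobelist per 10,000 scientists, 1% unemployment.
nobelist_rank = log_rank(10_000, top=True)   # best 1 in 10,000
unemployed_rank = log_rank(100, top=False)   # worst 1 in 100
rank_gap = nobelist_rank - unemployed_rank   # ranking error, in orders of magnitude

print(f"Nobelist rank: {nobelist_rank:.0f}")
print(f"Unemployed rank: {unemployed_rank:.0f}")
print(f"Gap: {rank_gap:.0f} orders of magnitude")
```

Changing either assumption (for example, a different unemployment rate) shifts the gap accordingly, which shows how sensitive the estimate is to the two input figures.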

This example once again indisputably shows that the accepted reward system of modern times must be dramatically transformed and improved. It needs to be emphasized that the case of Prasher became visible because it happened at the top of the scientific hierarchy. It would be reasonable to speculate that at the bottom the situation is much worse. There may be no area of business in the world that has such horrible errors in ranking and rewards as science. The history of mankind does not seem to know, for example, a case in which a military person with the education, skill, abilities and achievements of a general, who had not done anything wrong, was demoted and served only as a private soldier.

Perhaps the most notable attempts to develop a more or less objective system of ranking scientists were undertaken in the former Soviet bloc countries. For example, scientists and inventors applying for a patent or certificate of invention in the former USSR were required to provide a detailed list of contributions for every applicant. Even in the former USSR in the 1960s and 70s it was common knowledge among scholars (mostly jurists, who studied different aspects of the protection of scientists’ rights; see, for example, [27]) that without precise knowledge of personal contribution it is difficult or maybe impossible to design a logical, coherent and fair system of reward and rights protection. In the 1980s, for example, the Karpov system was developed in the former Soviet Union at the institute of the same name (www.nifhi.ru). According to that system, the rank of scientists was determined by a thoroughly developed method of score assignment for different types of personal contribution. The drawback of the Karpov system was its extensive reliance on the number of publications.

A detailed summary of work prior to the 1980s on the evaluation of the efficiency of fundamental science and on the ranking of scientists was given in a book by Yu. B. Tatarinov [29]. He also suggested a semi-objective system for evaluation of the personal contributions of scientists. To a great extent, Tatarinov’s methodology was based on a system introduced (supposedly jokingly, but in reality more seriously than jokingly) by the Russian physicist and Nobel Prize laureate Lev Landau [30]. Landau classified scientists using a 5-point logarithmic scale (a lower score for a higher rank). For example, A. Einstein was placed in the 0.5 class; N. Bohr, E. Schrödinger, W. Heisenberg, P. Dirac and E. Fermi in the 1.0 class. Landau placed himself in the 2.0 class.

Of course, any objective ranking system must be based on precise knowledge of the accomplishments of scientists. Probably the first attempt to bring out and report the contributions of individual scientists to the content of scientific publications was made by some medical journals in 1997 [28]. In 1999 the international scientific journal Nature introduced a policy encouraging coauthors to provide a list of contributions, and since 2009 this has been a mandatory requirement for all authors.

As described above there are two semi-objective methodologies to attribute a numerical value to the rank or creativity of scientists: based on the number of publications or the citation index. This essay proposes a novel, third, semi-objective method to obtain much more reliable numerical information to be used in the ranking of scientists.

The main goals of this essay are to suggest a way to obtain more reliable and precise information about the rank and creativity index of scientists; to make intellectual competition in science, as well as the RRS, more adequate, honest and fair; and, more generally, to increase on the basis of this method the efficiency and productivity of the whole scientific enterprise.

First of all, it is suggested that the future system for assessing academic performance, and in particular the creativity index, should be based on an open Internet system and on evaluation of the personal contributions of a scientist (not of his/her laboratory, graduate students, postdocs or coauthors). Secondly, since no one knows better than scientists themselves what they do, they are expected to be the best placed to summarize and describe their personal scientific achievements. The government, maybe the NSF, or even a private company (agency) or several companies, will create a web site (portal) that serves as a ranking agency and is open to anyone. Every agency can have its own metrics. Any scientist who wants to know her/his rank can submit self-collected, self-summarized data about the personal scientific contributions that he/she deems useful to all ranking agencies or to only one of his/her choice. The self-summarized, self-described data will be open to everyone through the Internet. In the first approximation, scientists should be evaluated in three categories: as generators of novel, useful, nonobvious ideas, concepts and hypotheses; as developers; and as examiners-reviewers of other scientists’ ideas. In science, the common definition of creativity has traditionally been based on the ability to generate useful, original, nonobvious ideas. A similar but more elitist definition was given by M. Csikszentmihalyi [31]: “Creativity is any act, idea, or product that changes the existing domain, or that transforms an existing domain into a new one.”

This essay discusses only metrics related to the creativity rank of scientists, i.e. their ability to generate original, useful, nonobvious ideas, concepts, proposals and hypotheses (subsequently referred to as ideas). It is not uncommon for a meritorious scientist to assess the value of an idea from rather briefly expressed information; the higher the rank of a scientist, the less information he/she needs to understand and characterize an idea. Here the term idea will mostly mean the minimal amount of information needed for at least one scientist to grasp the essence, nature, significance and meaning of the novel scientific knowledge presented by an author. Experienced scientists know that novel, nontrivial, valuable, understandable ideas can sometimes be expressed rather briefly, in several sentences, while sometimes even tens of pages are not enough.

The beauty of ideas is that, as a rule, they have only one author and can, therefore, easily be used in individual assessment metrics. It is important to note that the existing tradition in modern science of not distinguishing and separating the evaluation and attestation of the developers of ideas from that of their generators is, at best, confusing and counterproductive. This is because when discussing ideas we are, first of all, asking what is suggested, whereas in the case of development we are asking how quickly it was done, how much money was spent on it, and what the quality of the accomplished research work is. It is hypothesized that the existence of such a contradictory mess in the evaluation of scientists is far from being noble, unselfish and dictated by the interests of the whole society. Most probably it is due to the fact that the two groups have fundamentally different, antagonistic, maybe even adverse interests. Individuals who generate novel, nonobvious ideas would not mind owning them as long-lasting intellectual property. But developers, who are the majority of scientists, actively lobby, propagandize and brainwash the scientific community into believing that ideas have to be free and that they have no value, or only minimal value, until developed. Ideally, developers should be responsible for the quality, speed and price of the work, whereas the generators of ideas and the scientists who evaluated them should be responsible for the essence of the idea and for the choice of work to be developed. Not rarely one can see a brilliant idea that is poorly and inadequately developed, and more often a brilliant development of a trivial and useless idea. In other words, ideas and developments have their own independent lives and as such should be independently rewarded. Normally, the development of a novel idea can be planned and performed by several or even hundreds of independent individuals, companies or groups of scientists.
For example, the idea expressed in this essay is rather simple, and it can surely be further developed and realized by hundreds of groups, companies and individuals. Because there is no way to plan the appearance of nontrivial ideas and hypotheses, the generators of ideas should perhaps have a more flexible agenda and working environment, without the level of job security offered to developers. The idea generators of the future may be freelance scientists loosely affiliated with one or several universities and/or other scientific institutions.

In their submission to the Internet-based database of a ranking agency, scientists should briefly describe one or several ideas, state that they believe they were the first to propose them, and then describe where and how each idea was used and/or is useful in the world. The latter could be evidence that the idea led to the generation of further scientific knowledge, or that it was further developed by other scientists and appeared in their publications. Even if the applicant-author of an original idea has never been cited in any relevant publication derived from his/her idea (as happens rather often in modern science), he/she can cite the authors of those publications as users of the scientific knowledge, the idea, that he/she generated. Furthermore, scientists can include additional evidence of the usage of their ideas, such as: appearance in patents and in commercially available products based on those patents (even if the scientist-applicant was not an author or coauthor of the patent); use by teachers to teach students; use by scientists and graduate students from other countries, universities or departments in their research work. Practically any information can be included that explains how and where the idea was useful and beneficial for people and society, businesses, universities or countries, i.e. how it had a distinguishable positive impact. Since the author-applicant will be competing with other scientists, those scientists are expected to feel compelled to assess the correctness and relevance of the material that the applicant includes in the self-described information about his/her achievements. It is important to emphasize that the scientist-reviewers do not have to evaluate the rank of the applicants; they only need to check and then affirm or dispute the correctness and relevance of the applicant’s web pages. Such an approach has deep philosophical roots.
Following Karl Popper’s philosophy, scientist-reviewers here do not have to verify a scientist’s application; they only need to try to falsify it. They are expected to do so very carefully, because all applications and reviews will be available in open access. Therefore, the only beneficial tactic for them would be to do this job as correctly and honestly as possible. After that, the owner of the web portal (i.e. the ranking agency), which could be the government, the NSF, and/or a private company, will check the applicant’s claims and the reviewers’ comments, evaluate the disclosed information, and assign a rank to the scientist-applicant. Depending on their financial situation, scientists can also evaluate their indexes-ranks personally or pay the company for this service.

A variety of metrics, parameters and characteristics can be taken into consideration for the rank evaluation; e.g. the total number of papers published by other scientists in which the applicant’s idea was used; how many years and how many scientists had been trying to address a certain problem that was solved thanks to the idea suggested by the author; how many years the applicant’s scientific achievement has lasted without major modification, etc. Then the scientist can claim: I have rank, let us say, AAB from one ranking agency, ABB from another, etc. Or the rank may be in the form of a set of digits, let us say 5-2-3-x. The first digit might be the base-10 logarithm of the number of papers published by authors who used in some way the idea generated by the applicant. For example, if this number is 100,000, the first digit would be log10(100,000) = 5. The second digit might be the logarithm of the number of teachers who have used the applicant’s knowledge for instructional purposes. The third digit might be the logarithm of the product of the number of years and the number of scientists who attempted unsuccessfully to solve a problem. For example, suppose a certain mathematical theorem remained unproven for 100 years and on average 10 mathematicians were working on it. In this case the product is 100 × 10 = 1000, and the third digit is log10(1000) = 3. The fourth symbol, “x”, might indicate that the applicant does not claim any rank for the parameter related to how many years have passed since a certain well-known scientific achievement was accomplished by the scientist-applicant and never improved upon.
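As an illustration only, the digit-based rank described above could be computed along the following lines. The function names, the parameter names and the choice to round each logarithm to the nearest integer are assumptions made for this sketch; the essay leaves the exact metrics to each ranking agency.

```python
import math
from typing import Optional


def log_digit(count: Optional[float]) -> str:
    """Return log10(count) rounded to the nearest integer, or 'x' if nothing is claimed.

    Rounding to the nearest integer is an assumption; the essay does not say
    how non-integer logarithms should be handled.
    """
    if count is None:
        return "x"
    if count < 1:
        raise ValueError("count must be at least 1")
    return str(round(math.log10(count)))


def creativity_rank(
    citing_papers: Optional[int],
    teachers: Optional[int],
    years_unsolved: Optional[int],
    scientists_attempting: Optional[int],
    years_unimproved: Optional[int] = None,
) -> str:
    """Build a rank string such as '5-2-3-x' from the four example parameters."""
    # Third digit: log10 of (years the problem stayed open) x (scientists working on it).
    if years_unsolved is None or scientists_attempting is None:
        effort = None
    else:
        effort = years_unsolved * scientists_attempting
    digits = [
        log_digit(citing_papers),
        log_digit(teachers),
        log_digit(effort),
        log_digit(years_unimproved),
    ]
    return "-".join(digits)


# 100,000 papers and the 100-year, 10-mathematician theorem come from the text;
# the figure of 100 teachers is a made-up value chosen to give the second digit 2.
rank = creativity_rank(
    citing_papers=100_000,
    teachers=100,
    years_unsolved=100,
    scientists_attempting=10,
)
print(rank)
```

Passing None for a parameter reproduces the "x" symbol, i.e. a position for which the applicant makes no claim.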

Time will tell which company can better rank a scientist, provide the best reflection of his/her accomplishments, and determine which characteristics should be taken into account further. The business world has used these types of evaluations for many years; e.g. credit ratings by Standard & Poor’s, Moody’s, or the Fitch group. Of course, their ratings are not always perfect, but the situation would be much worse if the business world used a subjective, imprecise and unreliable rating system, such as that used in science, where established scientists evaluate and rank other scientists. Imagine the corresponding situation in the world of business - large corporations evaluate and rank their competitors and also middle-sized and small companies.

The following information will be accepted by a ranking agency/company:

1. Original, nonobvious ideas of scientists.

2. Information, provided by the author of an idea or anyone else, about where and how the idea was used in the world.

3. Information, provided by scientist-reviewers, about the potential rank and/or value of the idea (before it was used somewhere).

4. Information on the self-evaluated creativity rank of a scientist, taking into account all his/her ideas.

Simultaneously, the ranking company can accept original ideas of scientists as deposits in a bank of ideas. If an idea is made available by open access, any scientist can provide an evaluation of it. Later, the ideas can then have not only a history of their usage but also a history of their evaluation prior to development. Having this additional information about ideas will allow the company to accept self-evaluating information about the ranks not only of idea generators but also of expert evaluators-reviewers [7].

There is yet another important advantage of the suggested business method of creativity index/rank evaluation. If the self-described data about creativity rank are combined with data about all ideas suggested by the applicant, not only can the creativity rank be determined, but the efficiency of his/her scientific work can also be monitored. Statistically, most ideas suggested by scientists belong to the category of wrong, premature or not useful. The ratio of useful to useless ideas can provide data about the efficiency of the work performed by a scientist. All submitted ideas of scientists could be evaluated and reviewed by their colleagues, whether or not a review is solicited. Instead of writing proposals to funding agencies, scientists can simply send a link to their ideas, which may already have a sufficient number of positive reviews.
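A minimal sketch of such an efficiency measure is given below. The function names and the example counts are hypothetical; the second function, the share of useful ideas among all submitted ideas, is an alternative added here for illustration because it stays bounded when an applicant has no useless ideas on record.

```python
def idea_efficiency(useful: int, useless: int) -> float:
    """Ratio of useful to useless ideas, as mentioned in the text."""
    if useful < 0 or useless < 0:
        raise ValueError("counts must be non-negative")
    if useless == 0:
        # No useless ideas on record: the ratio is unbounded,
        # or undefined when no ideas were submitted at all.
        return float("inf") if useful > 0 else float("nan")
    return useful / useless


def useful_share(useful: int, useless: int) -> float:
    """Fraction of all submitted ideas that proved useful (bounded between 0 and 1)."""
    if useful < 0 or useless < 0:
        raise ValueError("counts must be non-negative")
    total = useful + useless
    if total == 0:
        raise ValueError("no ideas submitted")
    return useful / total


# Made-up counts for illustration: 3 useful ideas out of 20 submitted.
print(idea_efficiency(3, 17))
print(useful_share(3, 17))
```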

In an interview with TIME Magazine [32], the Nobel Prize-winning economist Joseph Stiglitz recently said: “in terms of basic statistics, the US has become less a land of opportunity than other advanced industrialized countries … by denying opportunity to people at the bottom, we are actually hurting our whole economy”. The same gloomy environment is hurting the modern scientific enterprise. Many US scientists, especially those at the bottom, with good, promising ideas have increasing difficulty in obtaining funding for their research. The modern science of science does not know exactly where the most promising ideas come from. However, to many it would not be a surprising or paradoxical discovery that they come from the bottom. Due primarily to the “bureaucratic jungle” system, those scientists and inventors face unacceptably many obstacles and hurdles in advancing, developing, and bringing to fruition their innovations. A simple social science innovation, a first step toward eradicating this unhealthy environment, has been suggested in this essay.

References

1. Report of the NAS, Rising Above the Gathering Storm, Revisited: Rapidly Approaching Category 5, 2010, http://www.nap.edu/catalog/12999.html

2. J.P.A. Ioannidis, Why most published research findings are false. PLoS Med 2(8): e124, 2005.

3. Against the Tide: A Critical Review by Scientists of How Physics and Astronomy Get Done. Martín Lopez Corredoira (Editor), Carlos Castro Perelman (Editor), Universal publishers, 2008.

4. A. Casadevall, F.C. Fang, Reforming science: Methodological and Cultural reforms; Structural Reforms. Infection and Immunity, v.80, No 3, 2012, p. 891-901.

5. Frederick Southwick, All’s Not Fair in Science and Publishing. False credit for scientific discoveries threatens the success and pace of research. The Scientist, July 1, 2012, http://the-scientist.com/2012/07/01/alls-not-fair-in-science-and-publishing/

6. Fred Southwick, May 9, 2012, The Scientist. Opinion: Academia Suppresses Creativity. By discouraging change, universities are stunting scientific innovation, leadership, and growth. http://the-scientist.com/2012/05/09/opinion-academia-suppresses-creativity/

7. O. Matveev: Business method for novel ideas and inventions evaluation and commercialization. June 2006: Patent application, US 20060129421.

8. O. Matveev, A Synergistic Model for Innovation Policy and Management in the Physical Sciences, Proceedings of the 1st ISPIM Innovation Symposium, Singapore, 14 -17 December 2008.

9. O. Matveev, http://rankyourideas.com; http://www.researcher-at.ru/index.php?topic=10043.0.

10. Lee Smolin, Why No “New Einstein”?, Physics Today, June 2005, p.56-57.

11. P. A. Lawrence, Rank injustice. Misallocation of credit is endemic in science. Nature, v. 415, 21 February 2002, p.835,836.

12. J.Lane, Let’s make science metrics more scientific, Nature, v. 464, 25 March 2010, p.488,489.

13. P.J. Feibelman, Courting Connections, Nature, v.476, 25 August 2011, p.479.

14. P.J. Feibelman, A Ph.D. Is Not Enough!, Basic Books Publ., 1993.

15. S. P. Ladas, Patents, Trademarks, and Related Rights, Harvard University Press, 1975.

16. A.R. Hall, Philosophers at War. The Quarrel Between Newton and Leibniz, Cambridge University Press, 1980.

17. William Lanouette, Genius in the Shadows: A Biography of Leo Szilard: The Man Behind the Bomb, University of Chicago Press, December 1, 1994.

18. R.K. Merton, The Matthew Effect in Science, Science, January 5, 1968, p.56-63.

19. R. Freeman, E. Weinstein, E. Marincola, Competition and Careers in Biosciences, Science, v.294, 14 December 2001, p.2293-2294.

20. W.G. Schulz, Giving proper credit, Chemical & Engineering News, March 12, 2007, p.35-38.

21. Bob Grant, Citation amnesia: The results. http://www.the-scientist.com/blog/display/55801/, 25 June 2009.

22. M.V. Simkin, V.P. Roychowdhury, Copied citations create renowned papers, arXiv:cond-mat/0305150, May 8, 2003.

23. G. Mowatt, L. Shirran, J.M. Grimshaw, D. Rennie, A. Flanagin, V. Yank, G. MacLennan, P.C. Gotzsche, L.A. Bero, Prevalence of Honorary and Ghost Authorship in Cochrane reviews, JAMA, June 5, v. 287, No.21, 2002, p.2769.

24. Troitsky Variant (written in Russian), January 17, 2012, p.3. Letter to Editor. http://trv-science.ru/2012/01/17/

25. A. Wilhite, E.A. Fong, Coercive Citation in Academic Publishing, Science, v. 335, Feb 2012, p.542-543.

26. P.J. Wyatt, Too many authors, too few creators. Physics Today, April 2012, p.9,10.

27. V.Ya. Ionas, Creative Works in Civil Law (written in Russian), Yuridicheskaya Literatura, Moscow, 1972, 168 p.

28. R. Smith, Authorship is dying: long live contributorship, BMJ, 1997, v.315, p.696

29. Yu. B. Tatarinov, The Problem of Fundamental Science Efficiency Evaluation (written in Russian), Nauka, Moscow, 1986.

30. M. Bessarab, Landau. Pages of his life, Moscow Worker Publ., 1978, p.66.

31. Mihaly Csikszentmihalyi, Creativity. Flow and the Psychology of Discovery and Invention, HarperCollins Publishers, 1996.

32. Interview with Nobel Prize-winning economist Joseph Stiglitz, Time Magazine, June 11, 2012, p.66.

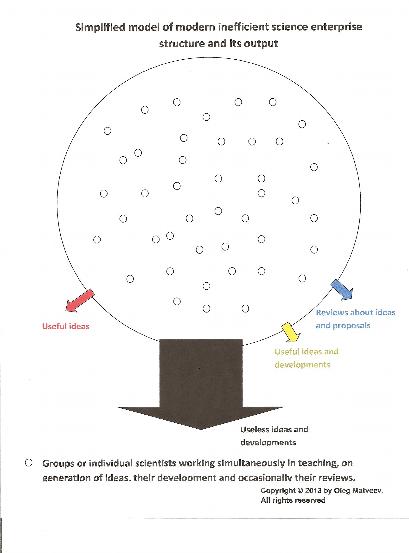

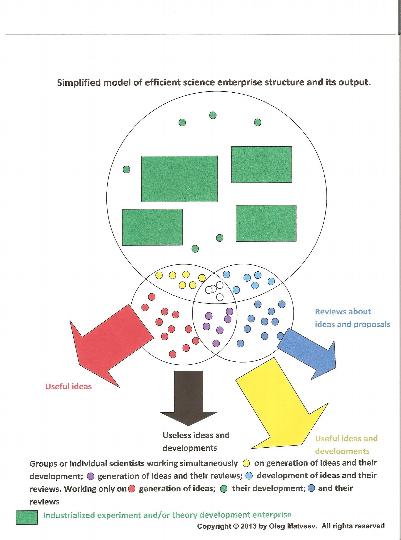

In the two pictures I have tried to show a simplified model of the structure and output of the modern scientific enterprise versus the structure and output that might be achieved if a novel, efficient model were implemented. Allegorically, the first picture can be envisioned as "colorless" science with an excess of "colorless" scientists. The second represents "colorful" science, which, no doubt, might be much more efficient.

The question arises: what might be the best proportion of different "colors" to get as much useful knowledge as possible from the scientific enterprise?

Copyright © 2013 by Oleg Matveev. All rights reserved.

This essay was published on February 4, 2013.

Please send all questions and comments to my email: laserol@gmail.com